Supreme Court chief justice warns of dangers of AI in judicial work, suggests it is “always a bad idea” to cite non-existent court cases::Mr Roberts suggested there may come a time when conducting legal research without the help of AI is ‘unthinkable’

Perhaps he ought to address the overt corruption in his own court before worrying about literally anything else.

They benefit from said corruption and have no incentive to address it.

It’s a good thing current supreme court justices don’t rule in favor of the highest bidder! Oh… wait.

My new-year’s wish is for the AI bubble to pop as soon as possible.

But why? And what bubble?

Not OC, but there’s definitely an AI bubble.

First of all, real “AI” doesn’t even exist yet. It’s all machine learning, which is a component of AI, but it’s not the same as AI. “AI” is really just a marketing buzzword at this point. Every company is claiming their app is “AI-powered” and most of them aren’t even close.

Secondly, “AI” seems to be where crypto was a few years ago. The bitcoin bubble popped (along with those of many other cryptocurrencies), and so will the AI bubble. Crypto didn’t go away, nor will it, and AI isn’t going away either. However, it’s a fad right now that isn’t going to last in its current form. (This one is just my opinion.)

That’s good advice. Shame he and his colleagues didn’t follow it in 303 Creative.

This is the best summary I could come up with:

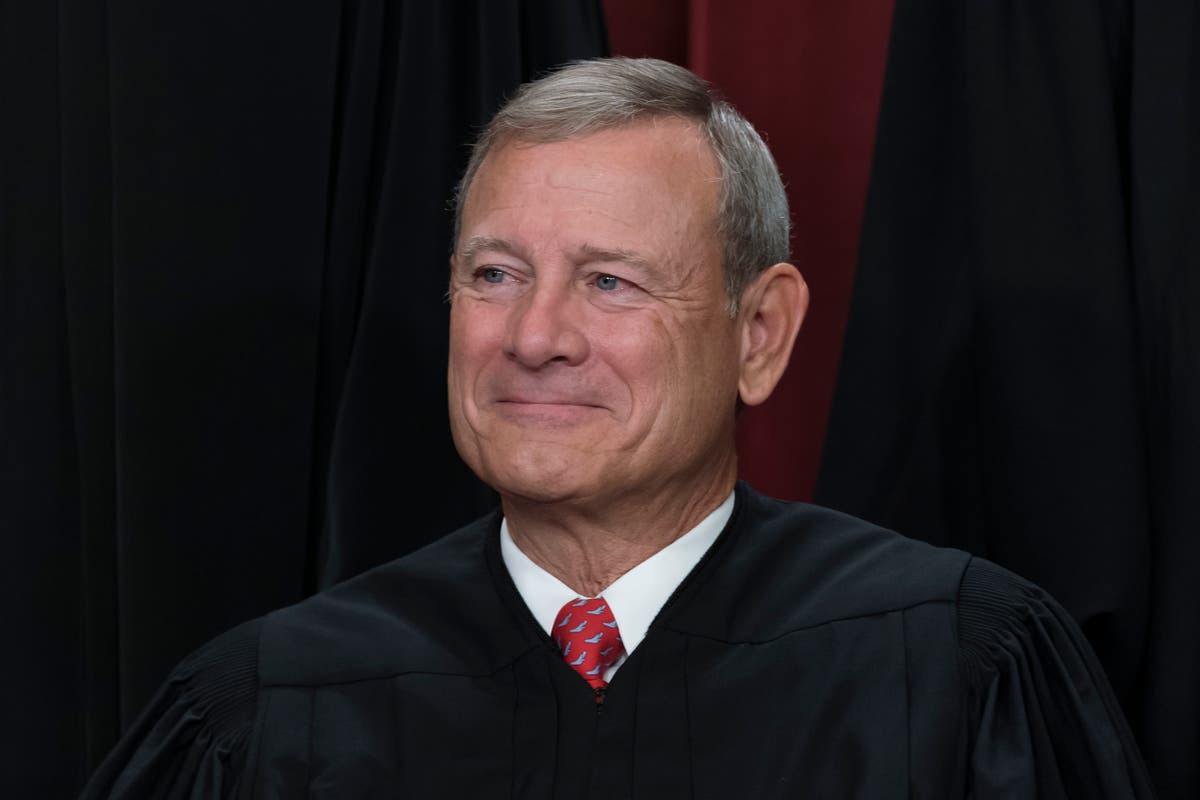

Supreme Court Chief Justice John Roberts discussed AI and its possible impact on the judicial system in a year-end report published over the weekend.

Mr Roberts acknowledged that the emerging tech was likely to play an increased role in the work of attorneys and judges, but he did not expect judges to be fully replaced anytime soon.

In addition to those risks, popular LLM chatbots like ChatGPT and Google’s Bard can produce false information (referred to as “hallucinations” rather than “mistakes”), which means users are rolling the dice anytime they trust the bots without checking their work first.

Michael Cohen, Donald Trump’s former lawyer and fixer, admitted that he had used an AI to look up court case records, which he then gave as a list of citations to his legal team.

Due to the potential pitfalls of AI reliance, Mr Roberts urged legal workers to exercise “caution and humility” when relying on the chatbots for their work.

The court has proposed a rule that would require lawyers to certify either that they did not rely on AI software to draft briefs, or that a human fact-checked and edited any text generated by a chatbot.

The original article contains 421 words; the summary contains 197 words. Saved 53%. I’m a bot and I’m open source!